1. Bayesian Linear Regression Starter#

Authors: Paul Bürkner, Lars Kühmichel, Stefan T. Radev

1.1. Introduction#

Welcome to the very first tutorial on using BayesFlow for amortized posterior estimation! In this notebook, we will estimate a linear regression model and illustrate some features of the library along the way. This tutorial introduces the lower-level interface, which allows full control over all network and training parameters, as well as passing of all familiar keras callback functions.

In traditional Bayesian inference, we seek to approximate the posterior distribution of model parameters given observed data for each new data instance separately. This process can be computationally expensive, especially for complex models or large datasets, because it often involves iterative optimization or sampling methods. This step needs to be repeated for each new instance of data.

Amortized Bayesian inference offers a solution to this problem. “Amortization” here refers to spreading out the computational cost over multiple instances. Instead of computing a new posterior from scratch for each data instance, amortized inference learns a function. This function is parameterized by a neural network that directly maps observations to an approximate posterior. This function is trained over the dataset to approximate the posterior for any new data instance efficiently. In this example, we will use a simple regression model to illustrate the basic concepts of amortized posterior estimation.

import os

if not os.environ.get("KERAS_BACKEND"):

# Set to your favorite backend

os.environ["KERAS_BACKEND"] = "tensorflow"

import numpy as np

from pathlib import Path

import keras

import bayesflow as bf

INFO:bayesflow:Using backend 'tensorflow'

BayesFlow offers flexible modules you can adapt to different Amortized Bayesian Inference (ABI) workflows. In brief:

The module

simulatorscontains high-level wrappers for gluing together priors, simulators, and meta-functions, and generating all quantities of interest for a modeling scenario.The module

adapterscontains utilities that preprocess the generated data from the simulator to a format more friendly for the neural approximators.The module

networkscontains the core neural architecture used for various tasks, e.g., a generativeFlowMatchingarchitecture for approximating distributions, or aSetTransformerfor learning permutation-invariant summary representations (embeddings).The module

appoximatorscontains high-level wrappers which connect the various networks together and instruct them about their particular goals in the inference pipeline.

In this notebook we will take components from each of these modules and show how they work together.

# avoid scientific notation for outputs

np.set_printoptions(suppress=True)

1.2. Generative Model#

From the perspective of BayesFlow, a generative model is more than just a prior (encoding beliefs about the parameters before observing data) and a data simulator (a likelihood function, often implicit, that generates data given parameters).

In addition, a model consists of various implicit context assumptions, which we can make explicit at any time. Furthermore, we can also amortize over these context variables, thus making our real-world inference more flexible (i.e., applicable to more contexts). We are leveraging the concept of amortized inference and extending it to context variables as well.

We will start with our data simulator:

def likelihood(beta, sigma, N):

# x: predictor variable

x = np.random.normal(0, 1, size=N)

# y: response variable

y = np.random.normal(beta[0] + beta[1] * x, sigma, size=N)

return dict(y=y, x=x)

As inputs, it takes the regression coefficient vector beta (intercept and slope), the residual SD sigma, and the number of observations N to simulate both our predictor variable x and subsequently our response variable y.

data_draws = likelihood(beta = [2, 1], sigma = 1, N = 3)

print(data_draws["y"].shape)

print(data_draws["y"])

(3,)

[1.47871691 1.98283522 2.87658279]

Next, we define our prior simulator to sample draws of the model parameters beta and sigma:

def prior():

# beta: regression coefficients (intercept, slope)

beta = np.random.normal([2, 0], [3, 1])

# sigma: residual standard deviation

sigma = np.random.gamma(1, 1)

return dict(beta=beta, sigma=sigma)

prior_draws = prior()

print(prior_draws["beta"].shape)

print(prior_draws["beta"])

(2,)

[-0.5259126 -1.68161681]

If we fix the number of observations N, the combination of likelihood and prior already fully defines our model simulator. However, we want to train BayesFlow to perform posterior approximations of linear regression models for varying number of observations. As such, we also need a simulator for N:

def meta():

# N: number of observation in a dataset

N = np.random.randint(5, 15)

return dict(N=N)

meta_draws = meta()

print(meta_draws["N"])

10

We can combine these three functions into a bayesflow simulator via:

simulator = bf.simulators.make_simulator([prior, likelihood], meta_fn=meta)

We passed the meta simulator separately to the meta_fn argument to make sure

that the number of observations N constant within each batch of simulated datasets. This is required since, within each batch, the generated datasets need to have the same shape for them to be easily transformable to tensors for deep learning.

Let’s see how sampling from the simulator works by sampling a batch of 500 datasets:

# Generate a batch of three training samples

sim_draws = simulator.sample(500)

print(sim_draws["N"])

print(sim_draws["beta"].shape)

print(sim_draws["sigma"].shape)

print(sim_draws["x"].shape)

print(sim_draws["y"].shape)

10

(500, 2)

(500, 1)

(500, 10)

(500, 10)

Let’s define the parameter key and corresponding names of individual parameters for easy filtering and plotting down the line.

par_keys = ["beta", "sigma"]

par_names = [r"$\beta_0$", r"$\beta_1$", r"$\sigma$"]

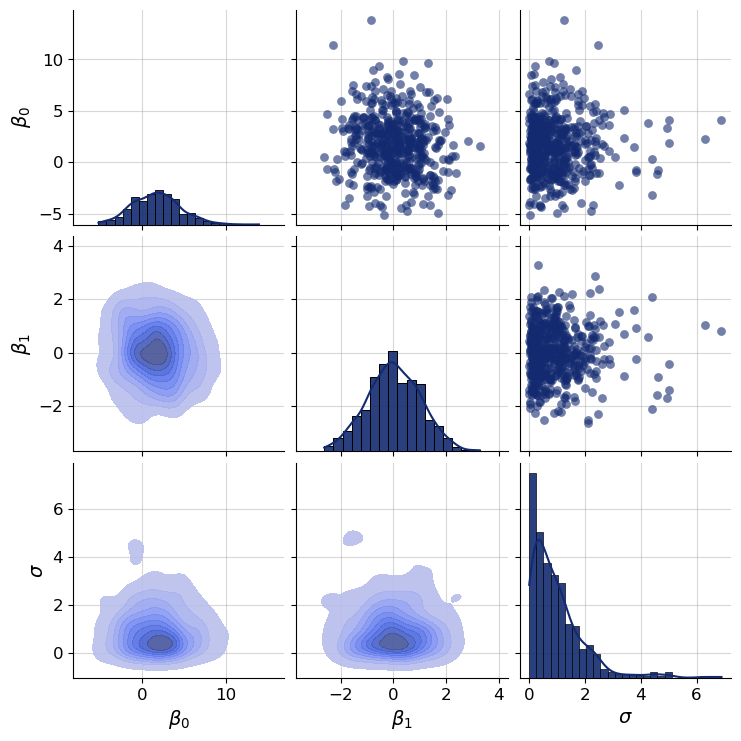

f = bf.diagnostics.plots.pairs_samples(

samples=sim_draws,

variable_keys=par_keys,

variable_names=par_names

)

1.3. Adapter#

To ensure that the training data generated by the simulator can be used for deep learning, we have do a bunch of transformations via adapter objects. They provides multiple flexible functionalities, from standardization to renaming, and so on.

Below, we build our own adapter from scratch but later on, BayesFlow will also provide default adapters that will already automate most of the commonly required steps.

adapter = (

bf.Adapter()

.broadcast("N", to="x")

.as_set(["x", "y"])

.constrain("sigma", lower=0)

.sqrt("N")

.convert_dtype("float64", "float32")

.concatenate(["beta", "sigma"], into="inference_variables")

.concatenate(["x", "y"], into="summary_variables")

.rename("N", "inference_conditions")

)

Let us discuss a few adapter steps in detail:

The .broadcast("N", to="x") transform will copy the value of N batch-size times to ensure that it will also have a batch_size dimension even though it was actually just a single value, constant over all datasets within a batch. The batch dimension will be inferred from x (this needs to be present during inference).

The .as_set(["x", "y"]) transform indicates that both x and y are treated as sets. That is, their values will be treated as exchangable such that they will imply the same inference regardless of the values’ order. This makes sense, since in linear regression, we can index the observations in arbitrary order and always get the same regression line.

The .constrain("sigma", lower=0) transform ensures that the residual standard deviation parameter sigma will always be positive. Without this constrain, the neural networks may attempt to predict negative sigma which of course would not make much sense.

Let’s check the shape of our processed data to be passed to the neural networks:

processed_draws = adapter(sim_draws)

print(processed_draws["summary_variables"].shape)

print(processed_draws["inference_conditions"].shape)

print(processed_draws["inference_variables"].shape)

(500, 10, 2)

(500, 1)

(500, 3)

Those shapes are as we expect them to be. The first dimenstion is always the batch size which was 500 for our example data. All variables adhere to this rule since the first dimension is indeed 500.

For summary_variables, the second dimension is equal to the sampled valued of N. It’s third dimension is 2, since we have combined x and y into summary variables, each of which are vectors of length N within each simulated dataset.

For inference_conditions, the second dimension is just 1 because we have passed only the scalar variable N there.

For inference_variables, the second dimension is 3 because it consists of

beta (a vector of length 2) and sigma (a scalar).

1.4. Neural Approximator#

Below, we will define the neural networks that we will use to estimate the posterior distribution of our linear regression parameters. At a high level, our architecture consists of a summary network \(\mathbf{h}\) and an inference network \(\mathbf{f}\) which jointly learn to invert a generative model. The summary network transforms input data \(\mathbf{x}\) of potentially variable size to a fixed-length representations. The inference network generates random draws from an approximate posterior \(\mathbf{q}\) via a conditional generative networks (here, an invertible network).

1.4.1. Summary Network#

Since our likelihood generates data exchangeably, we need to respect the permutation invariance of the data. Exchangeability in data means that the probability distribution of a sequence of observations remains the same regardless of the order in which the observations appear. In other words, the data is permutation invariant. For that, we will use a SetTransformer which does exactly that (a DeepSet is a cheaper version for simpler problems).

This network will take (at least) 3D tensors of shape (batch_size, num_obs, D) and reduce them to 2D tensors of shape (batch_size, summary_dim), where summary_dim is a hyperparameter to be set by the user (you). Heuristically, this number should not be lower than the number of parameters in a model. Below, we create a permutation-invariant network with summary_dim = 10:

summary_network = bf.networks.SetTransformer(summary_dim=10)

1.4.2. Inference Network#

To actually approximate the posterior distribution, we need to define a generative neural network. Here we choose a simple coupling flow network. For more complicated models and corresponding posteriors, we may need to choose a different, more flexible generative network, for example bf.networks.FlowMatching().

inference_network = bf.networks.CouplingFlow(transform="spline")

1.4.3. Workflow#

Workflow objects make life easier by combining inference networks and summary networks into approximators (internally).

workflow = bf.BasicWorkflow(

simulator=simulator,

adapter=adapter,

inference_network=inference_network,

summary_network=summary_network,

standardize=["inference_variables", "summary_variables"]

)

Now, we are ready to train our approximator to learn posterior distributions for linear regression models. To achieve this, we will call approximator.fit passing the simulator and a bunch of hyperparameters that control how long we want to train.

Note: when using JAX and the shape of your data differs in every batch (e.g., when observations vary), you will observe some compilation overhead during the first few steps. The total training time for this example is between 2-5 minutes on a standard laptop.

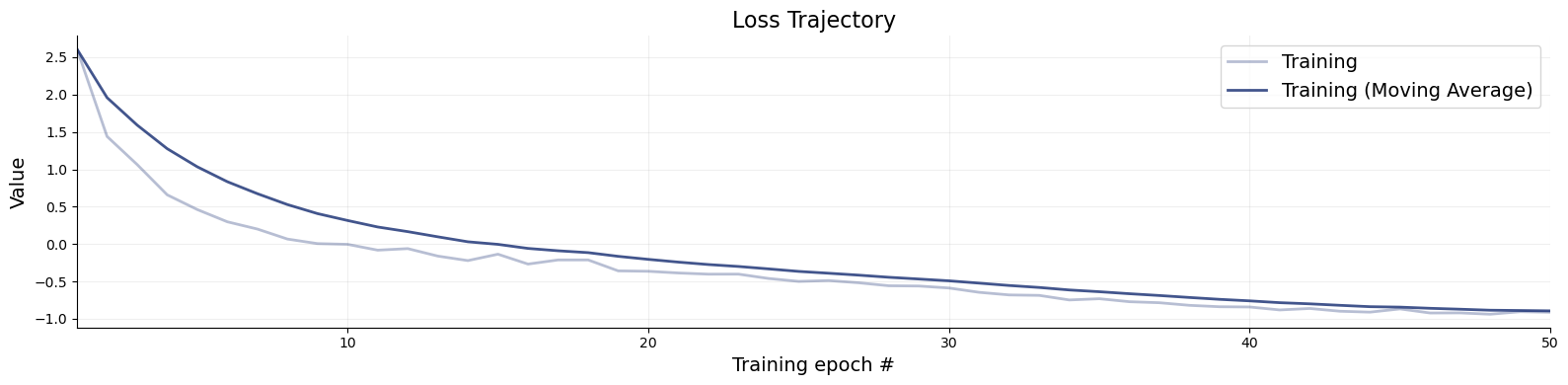

history = workflow.fit_online(epochs=50, batch_size=64, num_batches_per_epoch=200)

INFO:bayesflow:Fitting on dataset instance of OnlineDataset.

INFO:bayesflow:Building on a test batch.

Epoch 1/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 35s 50ms/step - loss: 2.6087

Epoch 2/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 11s 55ms/step - loss: 1.4402

Epoch 3/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 11s 57ms/step - loss: 1.0645

Epoch 4/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 11s 56ms/step - loss: 0.6592

Epoch 5/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 11s 57ms/step - loss: 0.4623

Epoch 6/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 59ms/step - loss: 0.2985

Epoch 7/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 62ms/step - loss: 0.2013

Epoch 8/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 58ms/step - loss: 0.0674

Epoch 9/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 11s 56ms/step - loss: 0.0066

Epoch 10/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 11s 57ms/step - loss: -0.0032

Epoch 11/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 59ms/step - loss: -0.0811

Epoch 12/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 58ms/step - loss: -0.0604

Epoch 13/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 59ms/step - loss: -0.1603

Epoch 14/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 13s 63ms/step - loss: -0.2205

Epoch 15/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 13s 64ms/step - loss: -0.1351

Epoch 16/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 13s 64ms/step - loss: -0.2672

Epoch 17/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 62ms/step - loss: -0.2118

Epoch 18/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 59ms/step - loss: -0.2116

Epoch 19/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 61ms/step - loss: -0.3582

Epoch 20/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 13s 63ms/step - loss: -0.3634

Epoch 21/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 11s 55ms/step - loss: -0.3865

Epoch 22/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 58ms/step - loss: -0.4023

Epoch 23/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 58ms/step - loss: -0.4019

Epoch 24/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 60ms/step - loss: -0.4600

Epoch 25/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 61ms/step - loss: -0.4990

Epoch 26/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 59ms/step - loss: -0.4880

Epoch 27/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 59ms/step - loss: -0.5177

Epoch 28/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 58ms/step - loss: -0.5574

Epoch 29/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 58ms/step - loss: -0.5610

Epoch 30/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 59ms/step - loss: -0.5873

Epoch 31/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 58ms/step - loss: -0.6450

Epoch 32/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 13s 63ms/step - loss: -0.6801

Epoch 33/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 13s 63ms/step - loss: -0.6861

Epoch 34/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 60ms/step - loss: -0.7473

Epoch 35/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 60ms/step - loss: -0.7308

Epoch 36/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 60ms/step - loss: -0.7707

Epoch 37/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 62ms/step - loss: -0.7844

Epoch 38/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 58ms/step - loss: -0.8192

Epoch 39/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 61ms/step - loss: -0.8380

Epoch 40/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 60ms/step - loss: -0.8412

Epoch 41/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 13s 64ms/step - loss: -0.8807

Epoch 42/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 60ms/step - loss: -0.8611

Epoch 43/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 11s 57ms/step - loss: -0.8980

Epoch 44/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 58ms/step - loss: -0.9102

Epoch 45/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 58ms/step - loss: -0.8669

Epoch 46/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 59ms/step - loss: -0.9216

Epoch 47/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 58ms/step - loss: -0.9203

Epoch 48/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 59ms/step - loss: -0.9381

Epoch 49/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 12s 58ms/step - loss: -0.9042

Epoch 50/50

200/200 ━━━━━━━━━━━━━━━━━━━━ 11s 57ms/step - loss: -0.9118

f = bf.diagnostics.plots.loss(history)

1.5. Diagnostics#

Let’s check out the resulting inference. Say we want to obtain 1000 posterior samples from our approximated posterior of a simulated dataset where we know the ground truth values.

You can also explore the automated diagnostics available through the methods:

compute_default_diagnostics()plot_default_diagnostics()

# Set the number of posterior draws you want to get

num_samples = 1000

# Simulate validation data (unseen during training)

val_sims = simulator.sample(200)

# Obtain num_samples samples of the parameter posterior for every validation dataset

post_draws = workflow.sample(conditions=val_sims, num_samples=num_samples)

# post_draws is a dictionary of draws with one element per named parameters

post_draws.keys()

dict_keys(['beta', 'sigma'])

print(post_draws["beta"].shape)

(200, 1000, 2)

Initial sanity checks of the posterior samples look good. post_draws["beta"] has shape (200, 1000, 2) which makes sense since we asked for inference of a 200 data sets (first dimension is 200), for which we wanted to generated 1000 posterior samples (second dimension is 1000). The third dimension is 2, since the beta variable was defined as a vector of length 2 (intercept and slope).

post_draws["sigma"].min()

5.0977684069041934e-05

The minimun posterior sample of sigma is positive indicating that our positivity enforcing constraing in the data adapter has indeed worked.

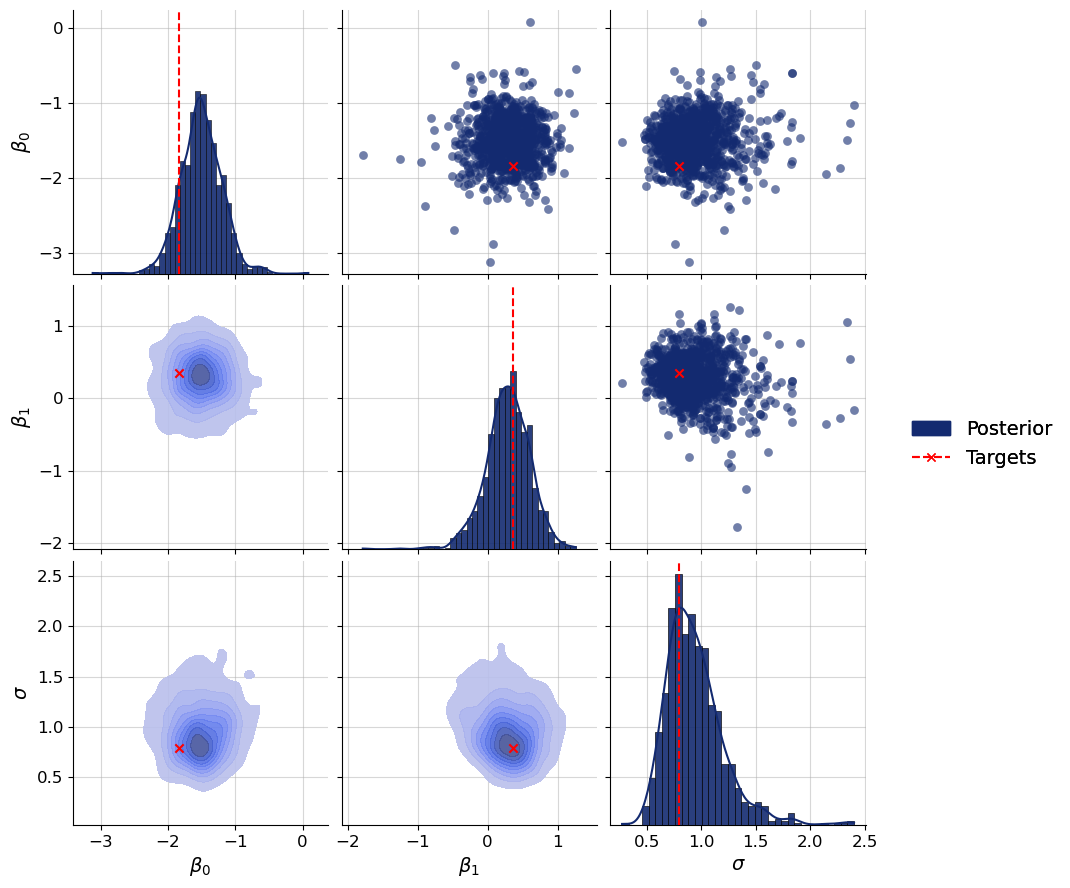

Let’s plot the joint posterior distribution of beta (both intercept and slope). Based on how we generated this particular dataset, we would expect the posteriors of beta to vary around its true values from val_sims["beta"]. Of course, if this was real data, we wouldn’t know the ground truth values. Still, it is always a good idea to first perform inference on simulated data as a diagnostic for whether the approximator has learned to approximate the true posteriors well enough. We will examplariy check the posterior of the first dataset:

f = bf.diagnostics.plots.pairs_posterior(

estimates=post_draws,

targets=val_sims,

dataset_id=0,

variable_names=par_names,

)

The true parameter values of the first dataset are indeed well covered by the posterior. Let’s check this more systematically for all validation datasets:

Note: We are validating recovery for a given \(N\) that happened to be drawn in the validation phase. Naturally, results will vary for different \(N\), but you can simply leverage the power of amortization to check recovery over the entire range of plausble observation numbers. For an actual scientific application, you would want to train longer.

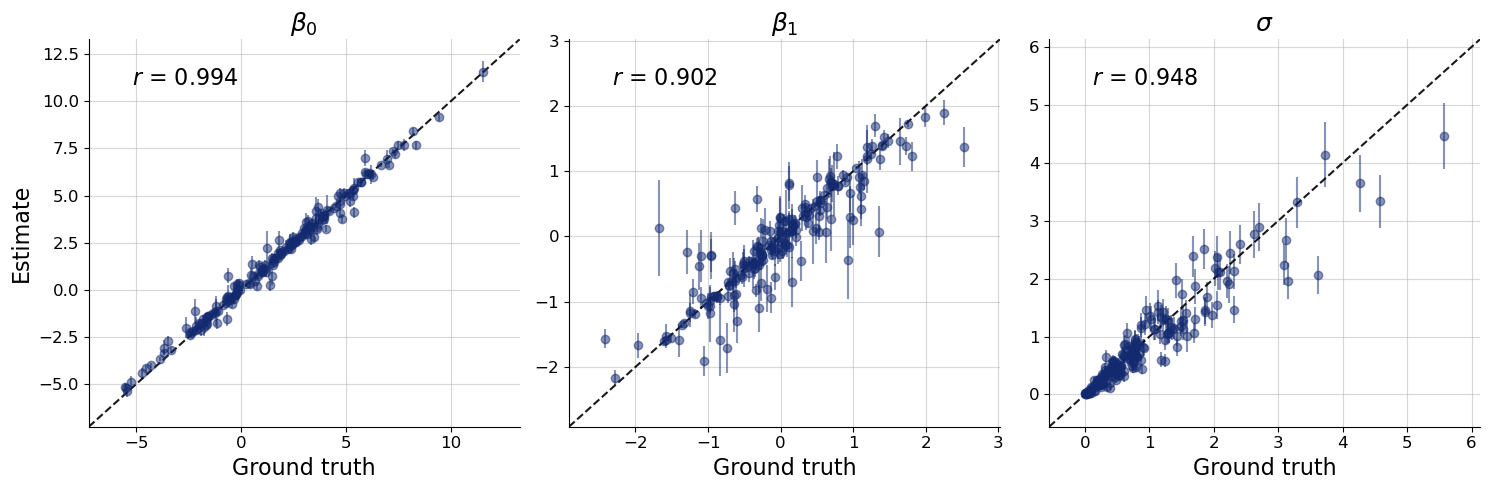

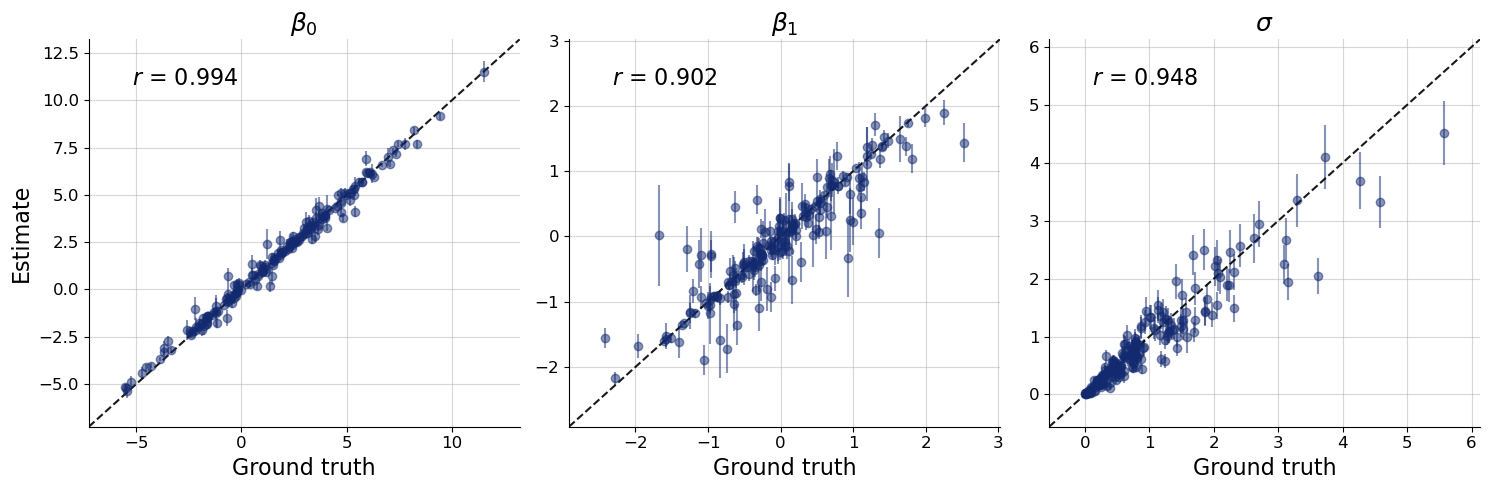

f = bf.diagnostics.plots.recovery(

estimates=post_draws,

targets=val_sims,

variable_names=par_names

)

Accuracy looks good for most datasets There is some more variation especially for \(\beta_1\) and \(\sigma\) but this is not necessarily a reason for concern. Keep in mind that perfect accuracy is not the goal of bayesflow inference. Rather, the goal is to estimate the correct posterior as close as possible. And this correct posterior might very well be far away from the true value for some datasets. In fact, we would fully expect the true value to sometimes be at the tail of the posterior.

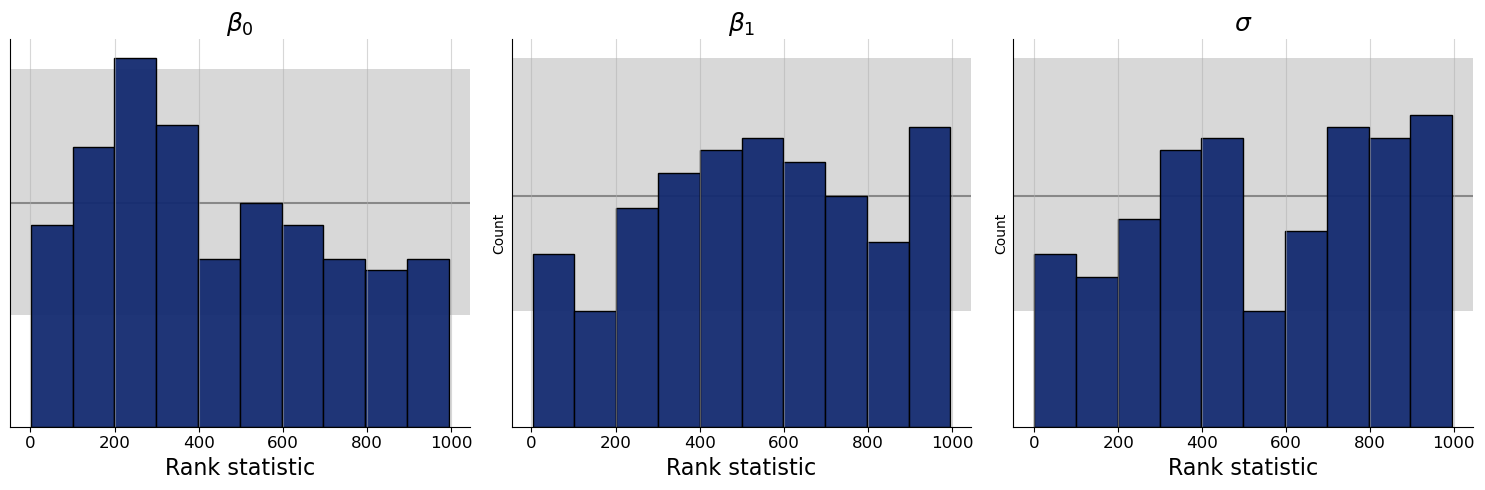

If this was not the case, than our posterior approximation may be too wide. Unfortunately, in many cases we don’t have access to the correct posterior, so we need a method that provides us with an indication of the posterior approximations’ accuracy without. This is where simulation-based calibration (SBC) comes into play. In short, if the true values are simulated from the prior used during inference (as is the case for our validatian data above), We would expect the rank of the true parameter value to be uniformly distributed from 1 to num_samples.

There are multiple graphical methods that use this property for diagnostics. For example, we can use histograms together with an uncertainty band within which we would expect the histogram bars to be if the rank statistics were indeed uniform.

f = bf.diagnostics.plots.calibration_histogram(

estimates=post_draws,

targets=val_sims,

variable_names=par_names

)

WARNING:bayesflow:The ratio of simulations / posterior draws should be > 20 for reliable variance reduction, but your ratio is 0. Confidence intervals might be unreliable!

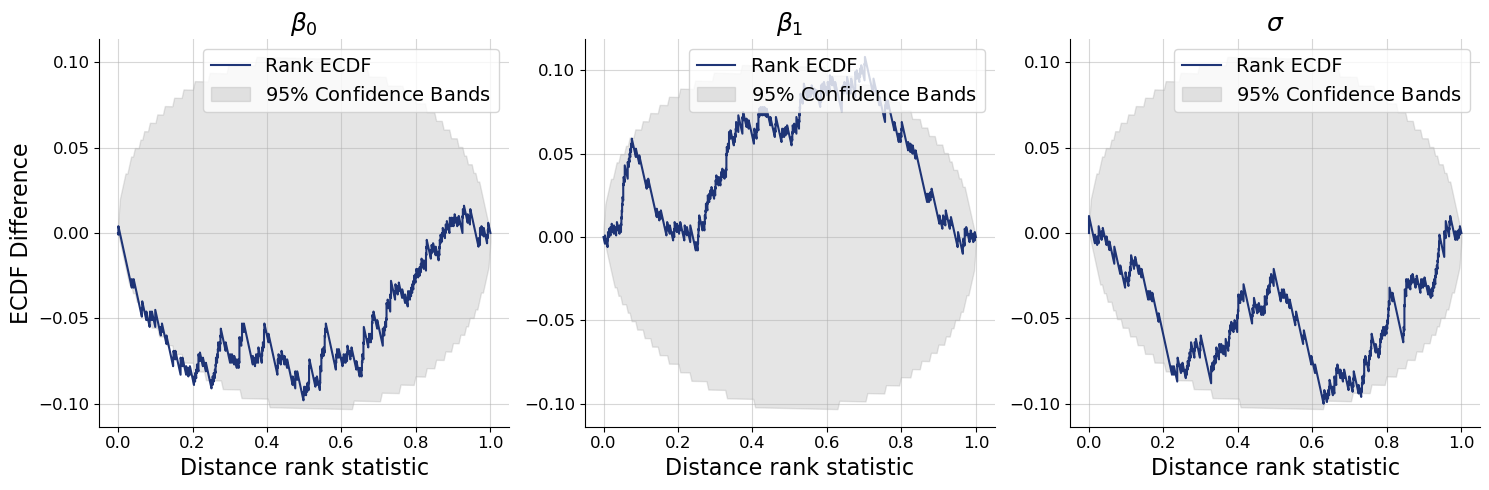

The histograms look quite good overall, but could be a bit more uniform. That said, the SBC histograms have some drawbacks on how the confidence bands are computed, so we recommend using another kind of plot that is based on the empirical cumulative distribution function (ECDF). For the ECDF, we can compute better confidence bands than for histograms, so the SBC ECDF plot is usually preferable. This SBC interpretation guide by Martin Modrák gives further background information and also practical examples of how to interpret the SBC plots.

f = bf.diagnostics.plots.calibration_ecdf(

estimates=post_draws,

targets=val_sims,

variable_names=par_names,

difference=True,

rank_type="distance"

)

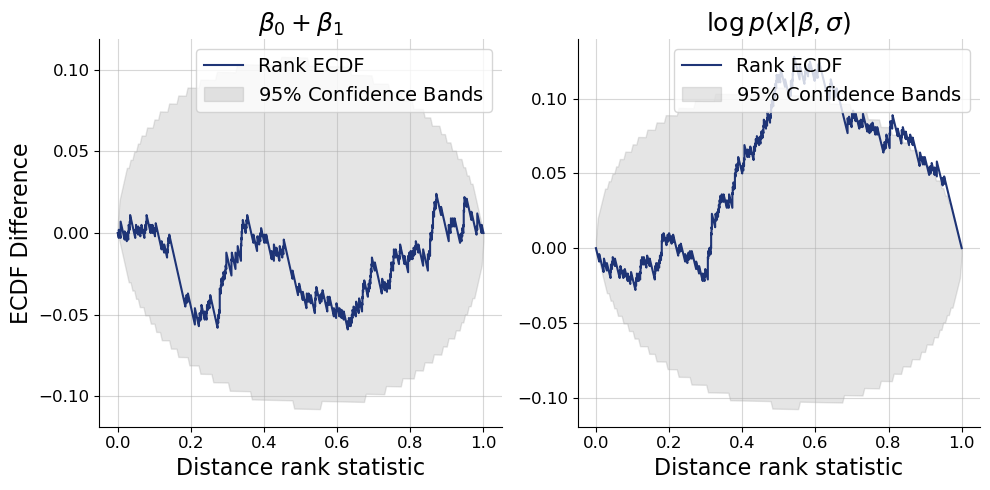

1.5.1. Custom Test Quantities for SBC#

By default, Bayesflow’s SBC diagnostics are only checking the calibration of the parameters directly. However, in principle, we can check every univariate quantity that is a function of parameters. This even includes data-dependent quantities. Checking SBC for data-dependent quantities is recommended because it can uncover many problems with the posterior approximation that purely parameter SBC cannot.

One powerful data-dependent test quantity is the joint log likelihood. Of course, it is only available in models that emit an analytic likelihood. But since we are performing ABI for linear regression here, we can use it in this case study. For other models without an anlytic likelihood, alternative test quantities can be considered, for example, predictive RMSEs or something more specific to the model at hand. Regardless of which quantity is encoded, the correponding function always follows the same logic: We input a input dictionary with numpy array elements that each have the same (first) batch dimension. As output, the function needs to supply a one-dimensional array of batch size length.

def joint_log_likelihood(data):

beta = data["beta"]

sigma = data["sigma"]

x = data["x"]

y = data["y"]

mean = beta[:,0][:,None] + beta[:,1][:,None] * x

log_lik = np.sum(- (y - mean)**2 / (2 * sigma**2) - 0.5 * np.log(2 * np.pi) - np.log(sigma), axis=-1)

return log_lik

joint_log_likelihood(val_sims).shape

(200,)

We sampled num_samples samples from the posterior for each condition (data). Since we want to pass posterior samples and conditions to a test quantity function that depends on both of them, we will repeat the conditions to adhere to the same shape.

val_sims_conditions = val_sims.copy()

val_sims_conditions.pop("beta")

val_sims_conditions.pop("sigma")

# N is only necessary for simulating data. It is not necessary to keep it for our test quantity.

val_sims_conditions.pop("N")

val_sims_conditions = keras.tree.map_structure(

lambda tensor: np.repeat(np.expand_dims(tensor, axis=1), num_samples, axis=1),

val_sims_conditions

)

post_draws_conditions = post_draws | val_sims_conditions

keras.tree.map_structure(keras.ops.shape, post_draws_conditions)

{'beta': (200, 1000, 2),

'sigma': (200, 1000, 1),

'y': (200, 1000, 10),

'x': (200, 1000, 10)}

Now that we have a batched function for the test quantity, as well as samples in the correct shapes to apply to them to, we can make an extended calibration checking plot. In addition to the joint_log_likelihood as test quantity, we will also use the sum of \(\beta_0\) and \(\beta_1\) as an

additional (non-data dependent) test quantity just to showcase the flexibility of the feature.

f = bf.diagnostics.plots.calibration_ecdf(

estimates=post_draws_conditions,

targets=val_sims,

test_quantities={ # this argument is new

r"$\beta_0 + \beta_1$": lambda data: np.sum(data["beta"], axis=-1),

r"$\log p(x|\beta,\sigma)$": joint_log_likelihood,

},

variable_keys=[], # we just want to show SBC for the custom test quantities

difference=True,

rank_type="distance"

)

The plots confirm that the approximate posteriors are overall well calibrated, even the joint log likelihood almost is. In fact, posterior approximations have to be highly accurate to ensure good calibration of the joint log likelihood. If, for example, we would have used an affine flow as inference network instead of a spline flow, we would have seen stronger miscalibration in the joint log likelihood but not in the parameters. Try it out as an exercise.

1.5.2. Checking Posterior Concentration#

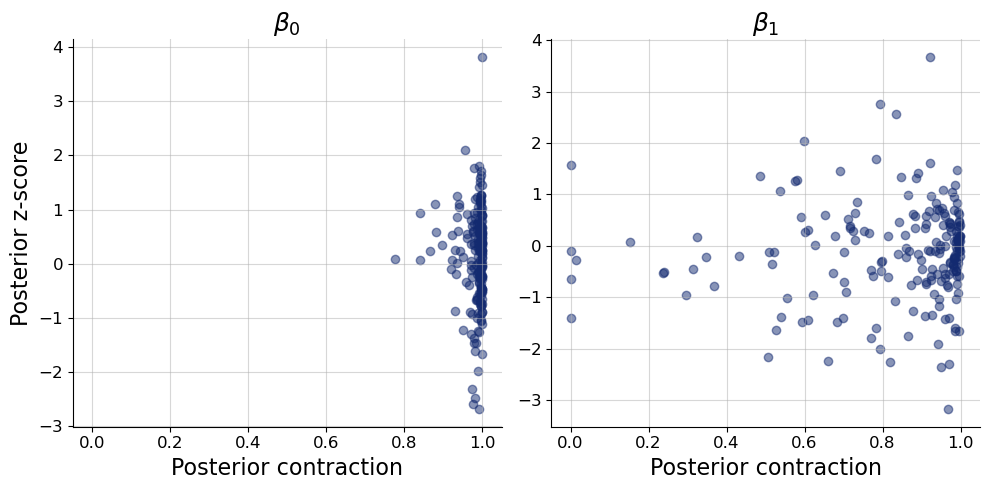

After having convinced us that the posterior approximation are overall reasonable, we can check how much and what kind of information in the data we encode in the posterior. Specifically, we might want to look at two interesting scores: (a) The posterior contraction, which measures how much smaller the posterior variance is relative to the prior variance (higher values indicate more contraction relative to the prior). (b) The posterior z-score which indicates the standardized difference between the posterior mean and the true parameter value. Since the posterior z-score requires the true parameter values, it can only be computed in simulated data settings. Here, we show the results for the \(\beta\) coefficients only to illustrate the use of the variable_keys argument.

f = bf.diagnostics.plots.z_score_contraction(

estimates=post_draws,

targets=val_sims,

variable_keys=["beta"],

variable_names=par_names[0:2]

)

We clearly see strong posterior contraction in almost all posteriors of \(\beta_0\) and in most posteriors of \(\beta_1\). In the latter case, there are some notable exceptions where little learning from prior to posterior has taken place. Most likely this is because the variance of the sample predictor value \(x\) was small, leading to reduced information about the slope \(\beta_1\).

In terms of posterior z-score, most estimates are between -2 and 2, which makes sense if our posterior is approximately normal and well calibrated. However, again, there are some notable exceptions with quite large posterior z-scores over greater than 3 in absolute values. These may be cases, where the learned posterior approximation was not yet fully accurate. So likely, these extreme cases would vanish if we trained our approximator a little longer.

1.5.3. Saving and Loading the Trained Networks#

For saving and later on reloading bayesflow approximators, we provide some convenient functionalities. For saving, we use:

# Recommended - full serialization (checkpoints folder must exist)

filepath = Path("checkpoints") / "regression.keras"

filepath.parent.mkdir(exist_ok=True)

workflow.approximator.save(filepath=filepath)

# Not recommended due to adapter mismatches - weights only

# approximator.save_weights(filepath="checkpoints/regression.h5")

For loading, we then use:

# Load approximator

approximator = keras.saving.load_model(filepath)

All the usual methods continue to work on the loaded approximator. For example:

# Again, obtain num_samples samples of the parameter posterior for every validation dataset

post_draws = approximator.sample(conditions=val_sims, num_samples=num_samples)

f = bf.diagnostics.plots.recovery(

estimates=post_draws,

targets=val_sims,

variable_names=par_names

)