7. Simple Model Comparison - One Sample T-Test

Author: Šimon Kucharský

In this notebook, we will show how to do a simple model comparison in BayesFlow amortized over the number of observations.

Amortized Bayesian model comparison leverages neural networks to learn a mapping from data to posterior model probabilities, effectively bypassing the need for costly inference procedures for each new dataset.

The method is particularly useful in scenarios where model evaluation needs to be performed repeatedly, as the inference cost is front-loaded into the training phase, enabling rapid comparisons at test time.
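For reference, the classifier learns to approximate the posterior model probability given by Bayes' rule over models. The marginal likelihood \(p(x \mid \mathcal{M}_j)\) requires integrating over the model's parameters. This is the costly step that amortization avoids repeating for every new dataset. If both models are simulated equally often during training (an assumption here, not something stated in this notebook), the prior model probabilities cancel and the posterior odds equal the Bayes factor \(BF_{10}\).

\[
p(\mathcal{M}_j \mid x) = \frac{p(x \mid \mathcal{M}_j)\, p(\mathcal{M}_j)}{\sum_{k} p(x \mid \mathcal{M}_k)\, p(\mathcal{M}_k)},
\qquad
p(x \mid \mathcal{M}_j) = \int p(x \mid \theta, \mathcal{M}_j)\, p(\theta \mid \mathcal{M}_j)\, d\theta,
\]

\[
\frac{p(\mathcal{M}_1 \mid x)}{p(\mathcal{M}_0 \mid x)} = \underbrace{\frac{p(x \mid \mathcal{M}_1)}{p(x \mid \mathcal{M}_0)}}_{BF_{10}} \cdot \frac{p(\mathcal{M}_1)}{p(\mathcal{M}_0)}.
\]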

import os

if "KERAS_BACKEND" not in os.environ:
    # set this to "torch", "tensorflow", or "jax"
    os.environ["KERAS_BACKEND"] = "tensorflow"

INFO:bayesflow:Using backend 'tensorflow'

import numpy as np

import keras

import bayesflow as bf

7.1. Simulator

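First, we define our simulators, one for every model that we want to compare. In this notebook, we use two simple models:

- \(\mathcal{M}_0\) (null model): \(\mu = 0\)
- \(\mathcal{M}_1\) (alternative model): \(\mu \sim \mathcal{N}(0, 1)\)

Each model has its own prior on \(\mu\), but the likelihood that simulates the data \(x\) is the same for both models: \(x_i \sim \mathcal{N}(\mu, 1)\) for \(i = 1, \ldots, n\).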

Once the two simulators are defined, we wrap them in one overarching simulator that draws from both models, so that the networks are trained on data from either model. The context variable \(n\) is the sample size we amortize over. It is drawn uniformly from the integers 20 to 29 by a shared simulator used by both models, so each simulated batch can mix simulations from either model, but all of them have the same sample size.

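def context(batch_shape, n=None):
    # Shared context: one sample size n (20 to 29) for the whole batch
    if n is None:
        n = np.random.randint(20, 30)
    return dict(n=n)


def prior_null():
    # Null model: mu is fixed at zero
    return dict(mu=0.0)


def prior_alternative():
    # Alternative model: mu ~ Normal(0, 1)
    mu = np.random.normal(loc=0, scale=1)
    return dict(mu=mu)


def likelihood(n, mu):
    # Shared likelihood: n observations x_i ~ Normal(mu, 1)
    x = np.random.normal(loc=mu, scale=1, size=n)
    return dict(x=x)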

simulator_null = bf.make_simulator([prior_null, likelihood])
simulator_alternative = bf.make_simulator([prior_alternative, likelihood])

simulator = bf.simulators.ModelComparisonSimulator(
    simulators=[simulator_null, simulator_alternative],
    use_mixed_batches=True,
    shared_simulator=context,
)
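To make the idea of a shared context and mixed batches concrete, here is a plain NumPy sketch of one training batch for these two models. The function `simulate_mixed_batch` is hypothetical and is not how `ModelComparisonSimulator` works internally. It also assumes that each simulation picks its model with equal probability, which we believe is the simulator's default but have not verified.

```python
import numpy as np


def simulate_mixed_batch(batch_size, rng, n=None):
    """Hypothetical sketch of a mixed batch for the two t-test models.

    One sample size n is shared by the whole batch; each simulation picks its
    model index uniformly at random (assumed equal prior model probabilities).
    """
    if n is None:
        n = int(rng.integers(20, 30))  # same range as `context`: 20..29
    model_idx = rng.integers(0, 2, size=batch_size)  # 0 = null, 1 = alternative
    # Null model fixes mu = 0; alternative draws mu ~ Normal(0, 1)
    mu = np.where(model_idx == 1, rng.normal(0.0, 1.0, size=batch_size), 0.0)
    # Shared likelihood: x_i ~ Normal(mu, 1), i = 1..n, for every simulation
    x = rng.normal(loc=mu[:, None], scale=1.0, size=(batch_size, n))
    # One-hot model indices: one column per model
    model_indices = np.eye(2)[model_idx]
    return dict(n=n, mu=mu[:, None], x=x, model_indices=model_indices)


rng = np.random.default_rng(1)
batch = simulate_mixed_batch(100, rng)
for key, value in batch.items():
    print(key, np.shape(value))
```

Because `n` is drawn once per batch, all rows of `x` share the same length, which is what allows the batch to be stored as a single array.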

Now we can simulate some data to see what the simulator produces.

data = simulator.sample(100)

print("n =", data["n"])
for key, value in data.items():
    print(key + " shape:", np.array(value).shape)

n = 20

n shape: ()

mu shape: (100, 1)

x shape: (100, 20)

model_indices shape: (100, 2)

Notice that the simulator automatically adds model_indices, which indicates the model that generated each simulated dataset. It is one-hot encoded and therefore has as many columns as there are models.

The second dimension of the observations x equals the context variable n.
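One-hot model indices are easy to convert to integer labels and back with NumPy. The small array below is made up for illustration and is not output from the simulator.

```python
import numpy as np

# Hypothetical one-hot model indices for four simulations (two models)
model_indices = np.array([[1, 0], [0, 1], [0, 1], [1, 0]], dtype=float)

# Recover the integer model label of each simulation (0 = null, 1 = alternative)
labels = np.argmax(model_indices, axis=1)
print(labels)  # [0 1 1 0]

# And go back to one-hot: row k of the identity matrix encodes model k
one_hot = np.eye(model_indices.shape[1])[labels]
print(np.array_equal(one_hot, model_indices))  # True
```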

7.2. Defining the neural networks

To make the simulated data usable for deep learning, we need to transform it into the structure the networks expect. Here, we define an adapter that performs these transformations.

adapter = (
    bf.Adapter()
    .sqrt("n")
    .broadcast("n", to="x")
    .as_set("x")
    .rename("n", "classifier_conditions")
    .rename("x", "summary_variables")
    .convert_dtype("float64", "float32")
)

Here is what the adapter is doing:

- `.sqrt("n")` replaces the sample size \(n\) with \(\sqrt{n}\) before it is used as a condition.
- `.broadcast("n", to="x")` copies the value of `n` once per simulation, so that it gets a batch dimension with shape `(batch_size, 1)`, even though it was simulated as a single value shared by all simulations in the batch. The batch size is inferred from the shape of `x`.
- `.as_set("x")` indicates that `x` is treated as a set. Its values are treated as exchangeable, meaning that the inference is the same regardless of their order.
- `.rename("n", "classifier_conditions")`: to compare the models, the classifier needs to know the sample size. Here, we make sure that `n` is passed directly into the classification network as its condition.
- `.rename("x", "summary_variables")`: the data `x` are passed through a summary network. The output of the summary network is automatically concatenated with `classifier_conditions` before both are passed together into the classification network.
- `.convert_dtype("float64", "float32")` converts float64 arrays to float32, the default floating-point precision in Keras.

We can now apply the adapter to simulated data to see the results:

processed_data = adapter(data)

for key, value in processed_data.items():
    print(key + " shape:", value.shape)

mu shape: (100, 1)

model_indices shape: (100, 2)

classifier_conditions shape: (100, 1)

summary_variables shape: (100, 20, 1)

All those shapes are now as expected. To summarise, we have:

- `model_indices`: the indicators of which model produced each dataset (one-hot encoded), with shape `(100, 2)`.
- `classifier_conditions`: \(\sqrt{n}\) repeated `batch_size` times, with shape `(100, 1)`.
- `summary_variables`: the observations `x` with shape `(100, n, 1)`. The last axis is added by `as_set` so that the data can be passed into a summary network.
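The shape changes summarised above can be mimicked in plain NumPy. The helper `mimic_adapter` below is hypothetical and is not BayesFlow code; it only reproduces the resulting shapes and dtypes for a toy batch, assuming `n` is a single integer shared by the batch.

```python
import numpy as np


def mimic_adapter(data):
    """Hypothetical NumPy re-creation of the adapter's shape and dtype changes."""
    x = np.asarray(data["x"], dtype=np.float64)  # (batch_size, n)
    batch_size = x.shape[0]
    n_sqrt = np.sqrt(data["n"])  # .sqrt("n")
    # .broadcast("n", to="x"): one copy per simulation, shape (batch_size, 1)
    conditions = np.full((batch_size, 1), n_sqrt)
    # .as_set("x"): add a trailing axis so each observation is a set element
    summary = x[..., None]  # (batch_size, n, 1)
    # .rename(...) and .convert_dtype("float64", "float32")
    return dict(
        classifier_conditions=conditions.astype(np.float32),
        summary_variables=summary.astype(np.float32),
    )


rng = np.random.default_rng(0)
toy = dict(n=20, x=rng.normal(size=(100, 20)))
out = mimic_adapter(toy)
for key, value in out.items():
    print(key, value.shape, value.dtype)
```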

Now we can finally define our networks.

As a summary network, we will use a simple deep set architecture which is appropriate for summarising exchangeable data.

As a classification network, we will use a simple multilayer perceptron with 4 hidden layers, each with 32 neurons and a SiLU activation function.

summary_network = bf.networks.DeepSet(summary_dim=8, dropout=None)

classifier_network = bf.networks.MLP(widths=[32] * 4, activation="silu", dropout=None)
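Why a deep set suits exchangeable data can be seen from its simplest form: apply the same function \(\phi\) to every observation, average the results, and transform the average with a second function \(\rho\). The sketch below uses random, untrained weights and is only an illustration of that idea; `bf.networks.DeepSet` has its own architecture, which this sketch does not reproduce. The sizes (16 hidden features, 8 summary dimensions to echo `summary_dim=8`) are illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)


def silu(z):
    # SiLU activation: z * sigmoid(z)
    return z / (1.0 + np.exp(-z))


# Hypothetical random weights: phi maps each observation (1 feature) to 16 features,
# rho maps the pooled 16 features to an 8-dimensional summary.
W_phi, b_phi = rng.normal(size=(1, 16)), rng.normal(size=16)
W_rho, b_rho = rng.normal(size=(16, 8)), rng.normal(size=8)


def deep_set(x_set):
    """Minimal deep set rho(mean_i phi(x_i)) for input of shape (batch, n, 1)."""
    h = silu(x_set @ W_phi + b_phi)  # (batch, n, 16), same phi for every element
    pooled = h.mean(axis=1)  # (batch, 16), independent of element order and of n
    return pooled @ W_rho + b_rho  # (batch, 8)


x = rng.normal(size=(4, 20, 1))
perm = rng.permutation(20)
s1 = deep_set(x)
s2 = deep_set(x[:, perm, :])  # same sets, shuffled observations
print(s1.shape, np.allclose(s1, s2))  # (4, 8) True
```

Mean pooling also makes the summary size independent of the number of observations, which is what lets one network handle every sample size \(n\).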

We can now define our approximator consisting of the two networks and the adapter defined above.

approximator = bf.approximators.ModelComparisonApproximator(
    num_models=2,
    classifier_network=classifier_network,
    summary_network=summary_network,
    adapter=adapter,
    standardize="summary_variables",
)

7.3. Training

Now we are ready to train the approximator that will allow us to compare the two models.

To do so, we define a few settings that control how long, and on how much data, we train the networks.

num_batches_per_epoch = 256

batch_size = 128

epochs = 10

Then, we define our optimizer and specify a schedule for its learning rate. Here, we use a CosineDecay schedule with the Adam optimizer.

learning_rate = keras.optimizers.schedules.CosineDecay(
    initial_learning_rate=1e-4,
    decay_steps=epochs * num_batches_per_epoch,
)

optimizer = keras.optimizers.Adam(learning_rate=learning_rate)
approximator.compile(optimizer=optimizer)
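With `decay_steps = epochs * num_batches_per_epoch = 10 * 256 = 2560`, and assuming one optimizer step per batch, the learning rate falls from `1e-4` to zero over the whole training run. The plain Python sketch below evaluates the cosine formula at a few steps; it assumes Keras' defaults of `alpha=0.0` and no warmup, and the function `cosine_decay` is a hypothetical stand-in, not the Keras class.

```python
import math


def cosine_decay(step, initial_learning_rate=1e-4, decay_steps=10 * 256, alpha=0.0):
    """Cosine decay without warmup; alpha is the final fraction of the initial rate."""
    step = min(step, decay_steps)  # the rate stays at its final value after decay_steps
    cosine = 0.5 * (1.0 + math.cos(math.pi * step / decay_steps))
    return initial_learning_rate * ((1.0 - alpha) * cosine + alpha)


for step in [0, 640, 1280, 1920, 2560]:
    print(step, f"{cosine_decay(step):.2e}")
```

Halfway through training (step 1280), the rate has dropped to half of its initial value.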

And we can fit the networks now!

history = approximator.fit(
    epochs=epochs,
    num_batches=num_batches_per_epoch,
    batch_size=batch_size,
    simulator=simulator,
    adapter=adapter,
)

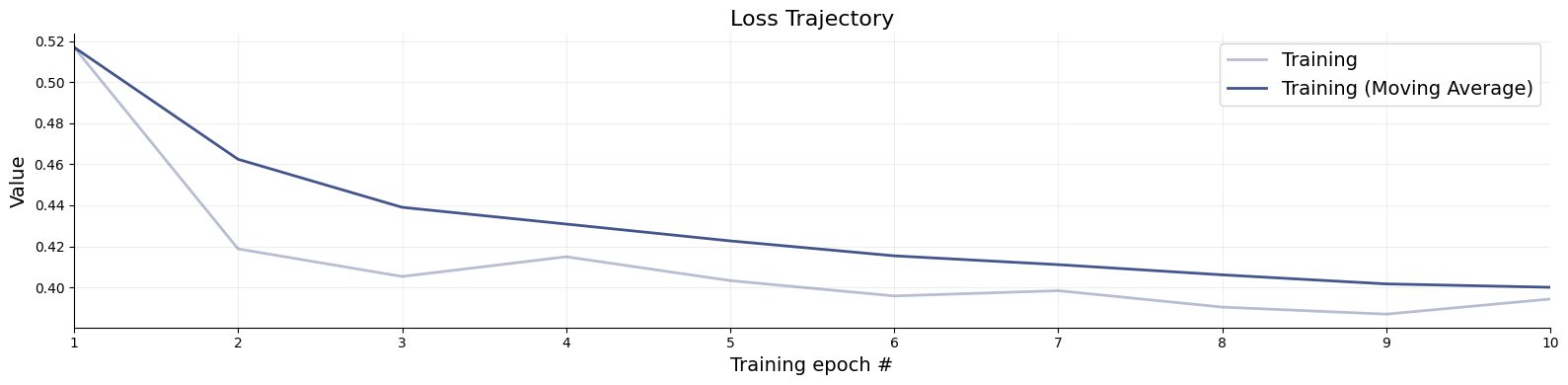

Once training has finished, we can visualize the losses.

f = bf.diagnostics.plots.loss(history=history)

7.4. Validation

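Once the model is trained, we can check whether the classifier does what we expect it to do. Here, we generate a validation dataset with the sample size fixed to \(n=10\). Note that this lies outside the range of sample sizes used during training (20 to 29), so this check also probes how well the classifier extrapolates to smaller samples.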

df = simulator.sample(5000, n=10)

print(f"{df['n']=}")

print(f"{df['x'].shape=}")

df['n']=10

df['x'].shape=(5000, 10)

To apply our approximator to this dataset, we use the `.predict` method to obtain the predicted posterior model probabilities for each simulated dataset.

pred_models = approximator.predict(conditions=df, probs=True)

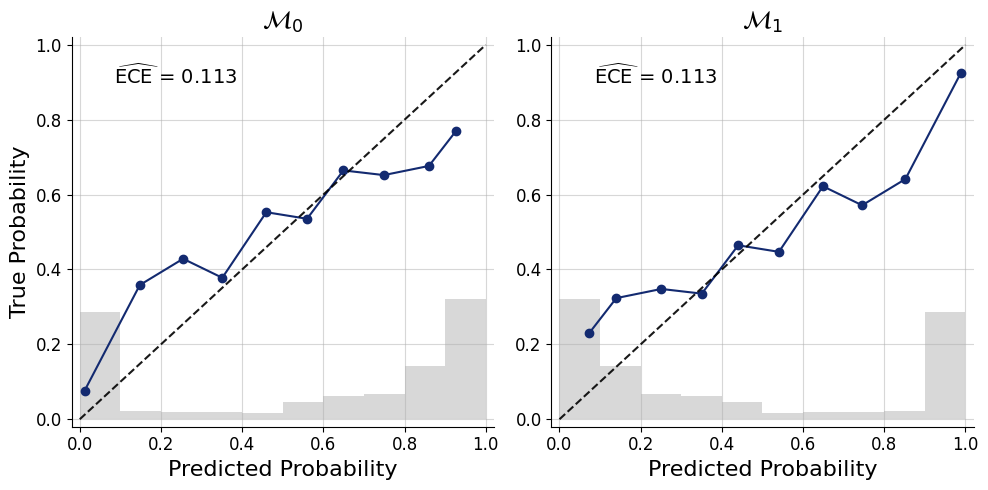
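For these two particular models, the marginal likelihoods are available in closed form, which gives an exact reference for the classifier. Integrating \(\mu\) out under \(\mathcal{M}_1\) gives \(BF_{10} = (n+1)^{-1/2} \exp\{ n^2 \bar{x}^2 / [2(n+1)] \}\), where \(\bar{x}\) is the sample mean. The sketch below turns this into \(p(\mathcal{M}_1 \mid x)\), assuming equal prior model probabilities. The function `exact_posterior_m1` is hypothetical and not part of BayesFlow or of this notebook.

```python
import numpy as np


def exact_posterior_m1(x):
    """Exact p(M1 | x) for the two t-test models, assuming equal prior model probabilities.

    M0: mu = 0;  M1: mu ~ Normal(0, 1);  both: x_i ~ Normal(mu, 1).
    x has shape (num_datasets, n).
    """
    x = np.asarray(x, dtype=float)
    n = x.shape[-1]
    xbar = x.mean(axis=-1)
    # log BF_10 = -0.5 * log(n + 1) + n^2 * xbar^2 / (2 * (n + 1))
    log_bf10 = -0.5 * np.log(n + 1) + (n * xbar) ** 2 / (2 * (n + 1))
    # p(M1 | x) = BF_10 / (1 + BF_10), written as a logistic function of log BF_10
    return 1.0 / (1.0 + np.exp(-log_bf10))


# Self-contained check with n = 10: data from M1 should get higher p(M1 | x) on average
rng = np.random.default_rng(0)
x_null = rng.normal(0.0, 1.0, size=(5000, 10))
mu = rng.normal(0.0, 1.0, size=(5000, 1))
x_alt = rng.normal(mu, 1.0, size=(5000, 10))
print(exact_posterior_m1(x_null).mean(), exact_posterior_m1(x_alt).mean())
```

If `pred_models` holds one column per model, in the order of `simulators`, its second column could be compared with `exact_posterior_m1(df["x"])`, for example in a scatter plot.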

We can inspect the calibration of posterior model probabilities.

f = bf.diagnostics.plots.mc_calibration(
    pred_models=pred_models,
    true_models=df["model_indices"],
    model_names=[r"$\mathcal{M}_0$", r"$\mathcal{M}_1$"],
)


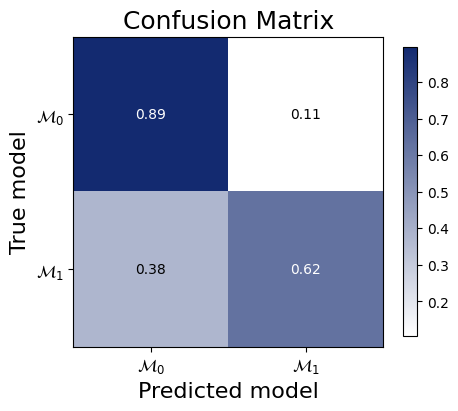
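The idea behind a calibration plot can be sketched with simple binning: group datasets by their predicted probability for a model and compare the average prediction in each bin with how often that model actually generated the data. The helper `calibration_curve` below is a generic reliability-diagram sketch, not the implementation of `bf.diagnostics.plots.mc_calibration`, and it assumes predictions and true models are arrays of shape `(num_datasets, num_models)`. It is tested on synthetic, perfectly calibrated predictions.

```python
import numpy as np


def calibration_curve(true_onehot, pred_probs, model=1, num_bins=10):
    """Hypothetical helper: mean predicted probability vs. observed frequency per bin."""
    p = np.asarray(pred_probs)[:, model]
    hit = np.asarray(true_onehot)[:, model]
    bins = np.minimum((p * num_bins).astype(int), num_bins - 1)  # bin index per dataset
    mean_pred, observed = [], []
    for b in range(num_bins):
        mask = bins == b
        if mask.any():  # skip empty bins
            mean_pred.append(p[mask].mean())
            observed.append(hit[mask].mean())
    return np.array(mean_pred), np.array(observed)


# Synthetic, perfectly calibrated predictions: model 1 is true with probability p1
rng = np.random.default_rng(0)
p1 = rng.uniform(size=200_000)
pred = np.column_stack([1 - p1, p1])
true = np.eye(2)[(rng.uniform(size=p1.size) < p1).astype(int)]
mean_pred, observed = calibration_curve(true, pred)
print(np.round(mean_pred, 2))
print(np.round(observed, 2))
```

For a well-calibrated classifier, the two rows should nearly coincide, which corresponds to points close to the diagonal in a calibration plot.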

We can also plot the confusion matrix to see how often we would make the correct decision by picking the model with the highest posterior probability.

f = bf.diagnostics.plots.mc_confusion_matrix(
    pred_models=pred_models,
    true_models=df["model_indices"],
    model_names=[r"$\mathcal{M}_0$", r"$\mathcal{M}_1$"],
    normalize="true",
)

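A confusion matrix of this kind can be computed by hand: take the model with the highest predicted probability for each dataset and count how often it matches the true model. The helper `confusion_matrix` below is hypothetical. It normalizes each row (each true model) to sum to one, which is our reading of `normalize="true"` by analogy with the scikit-learn convention, not a confirmed description of the BayesFlow plot. The toy predictions are made up.

```python
import numpy as np


def confusion_matrix(true_onehot, pred_probs, normalize=True):
    """Hypothetical helper: rows = true model, columns = model with highest predicted probability."""
    true_idx = np.argmax(true_onehot, axis=1)
    pred_idx = np.argmax(pred_probs, axis=1)
    k = np.asarray(true_onehot).shape[1]
    cm = np.zeros((k, k))
    np.add.at(cm, (true_idx, pred_idx), 1)  # count each (true, predicted) pair
    if normalize:
        cm = cm / cm.sum(axis=1, keepdims=True)  # each row sums to one
    return cm


true = np.eye(2)[[0, 0, 0, 1, 1]]
pred = np.array([[0.9, 0.1], [0.6, 0.4], [0.3, 0.7], [0.2, 0.8], [0.55, 0.45]])
print(confusion_matrix(true, pred))
```

Here two of the three datasets from the first model and one of the two from the second are classified correctly, so the diagonal holds the per-model accuracies.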

7.5. Saving and Loading

from pathlib import Path

# Recommended: full serialization (the checkpoints folder must exist, so create it first)
filepath = Path("checkpoints") / "ttest_classifier.keras"
filepath.parent.mkdir(exist_ok=True)
approximator.save(filepath=filepath)

# Load the approximator
approximator = keras.saving.load_model(filepath)

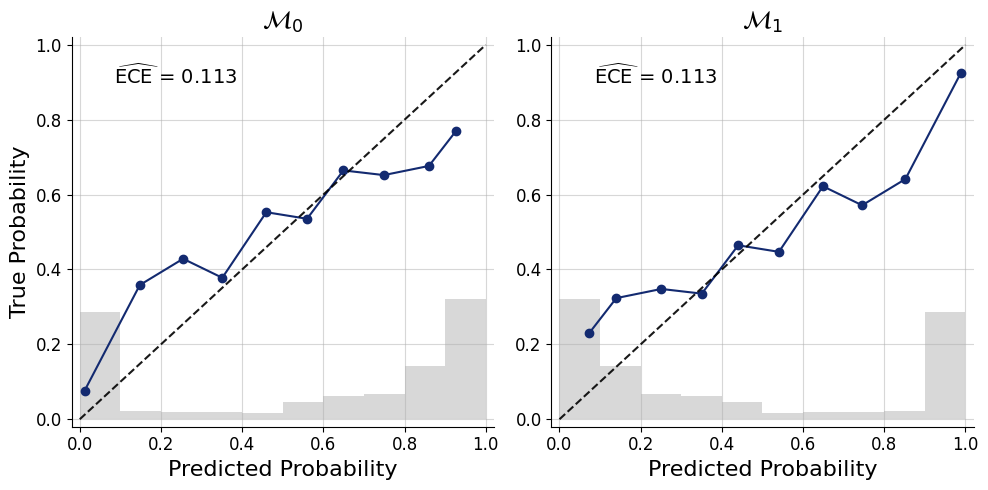

All the usual methods continue to work on the loaded approximator. For example:

pred_models = approximator.predict(conditions=df, probs=True)

f = bf.diagnostics.plots.mc_calibration(
    pred_models=pred_models,
    true_models=df["model_indices"],
    model_names=[r"$\mathcal{M}_0$", r"$\mathcal{M}_1$"],
)
